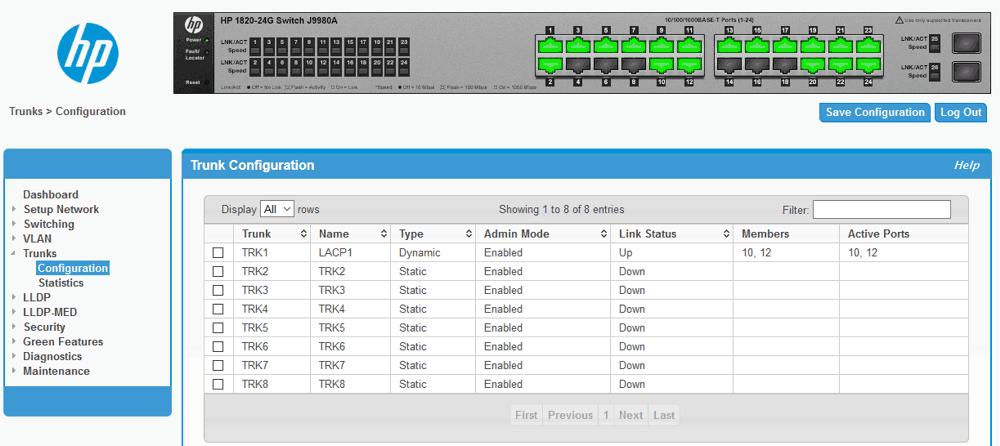

Debian LACP Bonding with an HP 1820-24G Switch

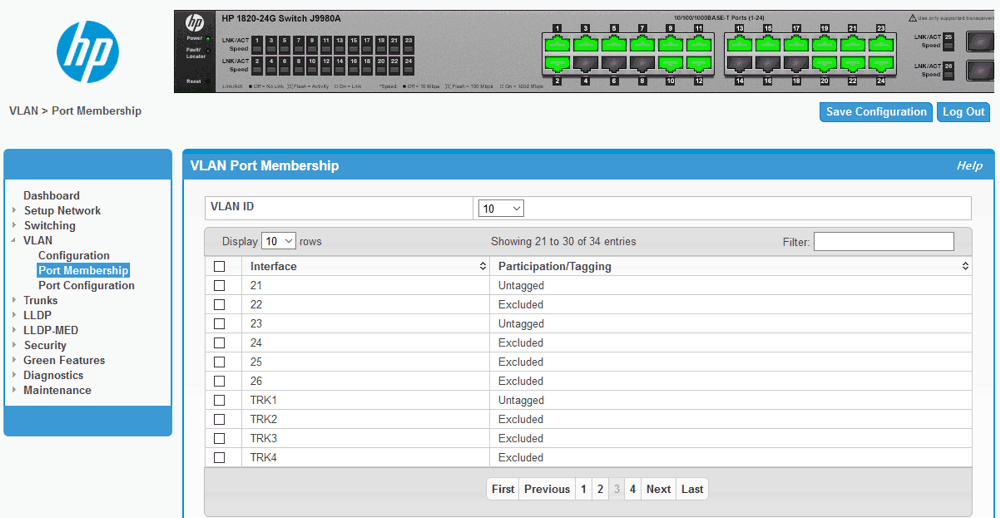

Once the HP 1820-24G switch (J9980A) has been configured with port trunking on the selected ports, and the trunk has been assigned to the VLAN if needed, you can move on to configuring the system.

Note that HP calls LACP mode a "dynamic trunk".

I found the following setting in a few howtos. It shouldn't do any harm.

echo "mii" >> /etc/modules
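Because `>>` appends every time it runs, repeating the command above leaves duplicate `mii` lines in /etc/modules. A minimal sketch of an idempotent variant follows; `ensure_line` is a hypothetical helper name, not something Debian ships.

```shell
# Append a line to a file only if that exact line is not already there.
# ensure_line is a hypothetical helper, not part of Debian.
ensure_line() {
    line=$1
    file=$2
    # -q: quiet, -x: match the whole line, -F: fixed string (no regex)
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

Run as root, `ensure_line mii /etc/modules` would add the module name once and do nothing on later runs.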

Next, edit /etc/network/interfaces as follows.

auto lo

iface lo inet loopback

iface enp2s0f0 inet manual

post-up ifconfig enp2s0f0 txqueuelen 5000 && ifconfig enp2s0f0 mtu 9000

iface enp2s0f1 inet manual

post-up ifconfig enp2s0f1 txqueuelen 5000 && ifconfig enp2s0f1 mtu 9000

auto bond0

iface bond0 inet manual

bond-slaves enp2s0f0 enp2s0f1

bond-mode 802.3ad

bond-miimon 100

bond-downdelay 200

bond-updelay 200

bond-lacp-rate 1

bond-xmit-hash-policy layer2+3

post-up ifconfig bond0 mtu 9000 && ifconfig bond0 txqueuelen 5000

auto vmbr0

iface vmbr0 inet static

address 172.16.5.10

netmask 255.255.255.0

bridge_ports bond0

bridge_stp off

bridge_fd 0

post-up ifconfig vmbr0 mtu 9000 && ifconfig vmbr0 txqueuelen 5000
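The configuration sets MTU 9000 on both NICs, on bond0 and on vmbr0. Jumbo frames only work if every device in that chain ends up with the same value, so it can be worth checking after boot. The sketch below reads the values from sysfs; `check_mtu` and the `SYSFS_NET` override are hypothetical names introduced here for illustration.

```shell
# Report every listed interface whose MTU differs from the expected value.
# check_mtu and SYSFS_NET are hypothetical; SYSFS_NET only exists so the
# function can be pointed at a test directory instead of /sys/class/net.
check_mtu() {
    expected=$1
    shift
    rc=0
    for ifname in "$@"; do
        # An unreadable or missing interface is reported as "unknown".
        actual=$(cat "${SYSFS_NET:-/sys/class/net}/$ifname/mtu" 2>/dev/null) || actual=""
        if [ "$actual" != "$expected" ]; then
            echo "$ifname: mtu is ${actual:-unknown}, expected $expected"
            rc=1
        fi
    done
    return $rc
}
```

With the names used in this post the call would be `check_mtu 9000 enp2s0f0 enp2s0f1 bond0 vmbr0`. It prints nothing and returns 0 when all four match.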

At this point, after a reboot, the bond/trunk will be active.

# dmesg

igb 0000:02:00.0 enp2s0f0: igb: enp2s0f0 NIC Link is Up 1000 Mbps Full Duplex, Flow Control: None

bond0: link status up for interface enp2s0f0, enabling it in 0 ms

bond0: link status definitely up for interface enp2s0f0, 1000 Mbps full duplex

bond0: Warning: No 802.3ad response from the link partner for any adapters in the bond

bond0: first active interface up!

vmbr0: port 1(bond0) entered blocking state

vmbr0: port 1(bond0) entered forwarding state

IPv6: ADDRCONF(NETDEV_CHANGE): vmbr0: link becomes ready

igb 0000:02:00.1 enp2s0f1: igb: enp2s0f1 NIC Link is Up 1000 Mbps Full Duplex, Flow Control: None

bond0: link status up for interface enp2s0f1, enabling it in 200 ms

bond0: link status definitely up for interface enp2s0f1, 1000 Mbps full duplex

# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: IEEE 802.3ad Dynamic link aggregation

Transmit Hash Policy: layer2+3 (2)

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 200

Down Delay (ms): 200

802.3ad info

LACP rate: fast

Min links: 0

Aggregator selection policy (ad_select): stable

System priority: 65535

System MAC address: 90:e2:ba:74:28:f8

Active Aggregator Info:

Aggregator ID: 1

Number of ports: 2

Actor Key: 9

Partner Key: 54

Partner Mac Address: 70:10:6f:71:3a:80

Slave Interface: enp2s0f0

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 90:e2:ba:74:28:f8

Slave queue ID: 0

Aggregator ID: 1

Actor Churn State: none

Partner Churn State: none

Actor Churned Count: 0

Partner Churned Count: 0

details actor lacp pdu:

system priority: 65535

system mac address: 90:e2:ba:74:28:f8

port key: 9

port priority: 255

port number: 1

port state: 63

details partner lacp pdu:

system priority: 32768

system mac address: 70:10:6f:71:3a:80

oper key: 54

port priority: 128

port number: 12

port state: 61

Slave Interface: enp2s0f1

MII Status: up

Speed: 1000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: 90:e2:ba:74:28:f9

Slave queue ID: 0

Aggregator ID: 1

Actor Churn State: none

Partner Churn State: none

Actor Churned Count: 0

Partner Churned Count: 0

details actor lacp pdu:

system priority: 65535

system mac address: 90:e2:ba:74:28:f8

port key: 9

port priority: 255

port number: 2

port state: 63

details partner lacp pdu:

system priority: 32768

system mac address: 70:10:6f:71:3a:80

oper key: 54

port priority: 128

port number: 10

port state: 61
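Two things in this output show that LACP was actually negotiated: both slaves report the same Aggregator ID, and the `port state` values are bit fields defined by the 802.3ad standard (bit 0 activity, bit 1 short timeout, bit 2 aggregation, bit 3 synchronization, bit 4 collecting, bit 5 distributing, bit 6 defaulted, bit 7 expired). The sketch below checks the first and decodes the second. The function names are hypothetical, and the parsing assumes the /proc layout shown above.

```shell
# Print the number of slaves; succeed only if they all share one aggregator.
# same_aggregator is a hypothetical helper; the file argument is optional.
same_aggregator() {
    awk '
        /^Slave Interface:/ { in_slave = 1; slaves++ }
        # Ignore the "Active Aggregator Info" entry that precedes the slaves.
        in_slave && /^[[:space:]]*Aggregator ID:/ { ids[$NF] = 1 }
        END {
            n = 0
            for (id in ids) n++
            print slaves + 0
            exit (n == 1 ? 0 : 1)
        }
    ' "${1:-/proc/net/bonding/bond0}"
}

# Turn an LACP port state number into the names of the bits that are set.
# decode_port_state is a hypothetical helper.
decode_port_state() {
    state=$1
    flags=""
    bit=1
    for name in activity short_timeout aggregation synchronization \
                collecting distributing defaulted expired; do
        if [ $(( state & bit )) -ne 0 ]; then
            flags="$flags $name"
        fi
        bit=$(( bit * 2 ))
    done
    echo "${flags# }"
}
```

Here `decode_port_state 63` lists the six lowest flags, including `short_timeout`, which matches `bond-lacp-rate 1` on the host. `decode_port_state 61` gives the same list without `short_timeout`, so the switch side reports the long timeout.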

andrea

- Published in Networking, Sistemistica, Tips & Tricks

CentOS 7 Cluster with PCS

I install two identical VMs in VirtualBox (4 cores, 4 GB RAM, 32 GB HDD) with an up-to-date minimal CentOS 7 installation.

I upgrade the kernel to 4.16 from the ELRepo repository.

# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

# yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

# yum --enablerepo=elrepo-kernel install kernel-ml kernel-ml-devel

I edit /etc/default/grub.

GRUB_TIMEOUT=5

GRUB_DEFAULT=0

GRUB_DISABLE_SUBMENU=true

GRUB_TERMINAL_OUTPUT="console"

GRUB_CMDLINE_LINUX="rd.lvm.lv=centos/root rd.lvm.lv=centos/swap crashkernel=auto rhgb quiet"

GRUB_DISABLE_RECOVERY="true"

Then I run the following to apply the change.

# grub2-mkconfig -o /boot/grub2/grub.cfg
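The post does not say which line of /etc/default/grub was changed. A stock CentOS 7 file ships with `GRUB_DEFAULT=saved`, so presumably the edit is `GRUB_DEFAULT=0`, which boots the first menu entry (normally the newest kernel). Under that assumption, the edit could be scripted as in this sketch; `set_grub_default` is a hypothetical helper name.

```shell
# Set GRUB_DEFAULT in a grub defaults file, replacing an existing entry
# or appending one if none is present.
# set_grub_default is a hypothetical helper; the file argument is optional.
set_grub_default() {
    value=$1
    file=${2:-/etc/default/grub}
    if grep -q '^GRUB_DEFAULT=' "$file"; then
        # Write to a temporary file first, then replace the original.
        sed "s/^GRUB_DEFAULT=.*/GRUB_DEFAULT=$value/" "$file" > "$file.tmp" &&
            mv "$file.tmp" "$file"
    else
        printf 'GRUB_DEFAULT=%s\n' "$value" >> "$file"
    fi
}
```

After `set_grub_default 0`, the `grub2-mkconfig` command above still has to be run for the change to take effect.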

I add a few packages.

# yum install epel-release.noarch

# yum update

# yum group install "Development Tools"

# yum install bzip2 net-tools psmisc nmap acpid unzip

I edit /etc/hosts on both nodes.

192.168.254.83 nodeA.netlite.it nodeA

192.168.254.84 nodeB.netlite.it nodeB

I install the many packages the cluster requires.

# yum install pcs fence-agents-all -y

I add the firewall rules.

# firewall-cmd --permanent --add-service=high-availability

# firewall-cmd --add-service=high-availability

# firewall-cmd --list-service

dhcpv6-client ssh high-availability

I change the password of the hacluster user.

# passwd hacluster

Changing password for user hacluster.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

I start the services.

# systemctl start pcsd.service

# systemctl enable pcsd.service

I authorize the cluster nodes.

# pcs cluster auth nodeA.netlite.it nodeB.netlite.it

Username: hacluster

Password:

nodeA.netlite.it: Authorized

nodeB.netlite.it: Authorized

I initialize the cluster.

# pcs cluster setup --start --name ClusterTest nodeA.netlite.it nodeB.netlite.it

Destroying cluster on nodes: nodeA.netlite.it, nodeB.netlite.it...

nodeA.netlite.it: Stopping Cluster (pacemaker)...

nodeB.netlite.it: Stopping Cluster (pacemaker)...

nodeB.netlite.it: Successfully destroyed cluster

nodeA.netlite.it: Successfully destroyed cluster

Sending 'pacemaker_remote authkey' to 'nodeA.netlite.it', 'nodeB.netlite.it'

nodeA.netlite.it: successful distribution of the file 'pacemaker_remote authkey'

nodeB.netlite.it: successful distribution of the file 'pacemaker_remote authkey'

Sending cluster config files to the nodes...

nodeA.netlite.it: Succeeded

nodeB.netlite.it: Succeeded

Starting cluster on nodes: nodeA.netlite.it, nodeB.netlite.it...

nodeB.netlite.it: Starting Cluster...

nodeA.netlite.it: Starting Cluster...

Synchronizing pcsd certificates on nodes nodeA.netlite.it, nodeB.netlite.it...

nodeA.netlite.it: Success

nodeB.netlite.it: Success

Restarting pcsd on the nodes in order to reload the certificates...

nodeA.netlite.it: Success

nodeB.netlite.it: Success

I enable the cluster.

# pcs cluster enable --all

I display the status.

# pcs cluster status

Cluster Status:

Stack: corosync

Current DC: nodeA.netlite.it (version 1.1.16-12.el7_4.8-94ff4df) - partition with quorum

Last updated: Tue Apr 3 13:02:21 2018

Last change: Tue Apr 3 13:00:43 2018 by hacluster via crmd on nodeA.netlite.it

2 nodes configured

0 resources configured

PCSD Status:

nodeA.netlite.it: Online

nodeB.netlite.it: Online

Detailed status.

# pcs status

Cluster name: ClusterTest

WARNING: no stonith devices and stonith-enabled is not false

Stack: corosync

Current DC: nodeA.netlite.it (version 1.1.16-12.el7_4.8-94ff4df) - partition with quorum

Last updated: Tue Apr 3 13:02:53 2018

Last change: Tue Apr 3 13:00:43 2018 by hacluster via crmd on nodeA.netlite.it

2 nodes configured

0 resources configured

Online: [ nodeA.netlite.it nodeB.netlite.it ]

No resources

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled
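For scripted checks, the `Online: [ ... ]` line of the `pcs status` output above is the part that says which nodes have joined. A minimal sketch that extracts it follows, assuming the line keeps exactly the layout shown here; `online_nodes` is a hypothetical helper name.

```shell
# Read "pcs status" output on stdin and print the online nodes, one per line.
# online_nodes is a hypothetical helper; it relies on the
# "Online: [ node1 node2 ]" layout shown in this post.
online_nodes() {
    sed -n 's/^[[:space:]]*Online: \[ *\(.*[^ ]\) *]$/\1/p' | tr -s ' ' '\n'
}
```

It would be used as `pcs status | online_nodes`. Piping the result through `wc -l` gives the number of online nodes, which should be 2 for this cluster.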

I disable the STONITH devices (better not to, but it is fine for a test).

# pcs property set stonith-enabled=false

If the devices need to be enabled, a good starting point is here: STONITH.

I configure a filesystem.

# pcs resource create httpd_fs Filesystem device="/dev/mapper/vg_apache-lv_apache" directory="/var/www" fstype="ext4" --group apache

I configure a VIP.

# pcs resource create httpd_vip IPaddr2 ip=192.168.12.100 cidr_netmask=24 --group apache

I configure a service.

# firewall-cmd --add-service=http

# firewall-cmd --permanent --add-service=http

# pcs resource create httpd_ser apache configfile="/etc/httpd/conf/httpd.conf" statusurl="http://127.0.0.1/server-status" --group apache
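The `statusurl` given to the apache resource only works if httpd actually serves /server-status, and the post does not show that part. A minimal sketch of one way to enable it on both nodes follows, assuming Apache 2.4 syntax. The file name status.conf and the helper `write_status_conf` are hypothetical choices, not taken from the original.

```shell
# Write an httpd drop-in that exposes /server-status to local requests only.
# write_status_conf and the default path are hypothetical choices.
write_status_conf() {
    target=${1:-/etc/httpd/conf.d/status.conf}
    cat > "$target" <<'EOF'
<Location /server-status>
    SetHandler server-status
    Require local
</Location>
EOF
}
```

`Require local` keeps the status page reachable from 127.0.0.1, which is what the `statusurl` above points at, without exposing it on the VIP.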

Stop a node.

# pcs cluster stop nodeA.netlite.it
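After stopping a node, the interesting question is whether the apache group moved to the other one. The sketch below pulls the current node of a resource out of `pcs status`. It assumes the `name (agent): Started node` line layout that Pacemaker 1.1 prints for running resources, which is not shown in this post. `resource_node` is a hypothetical helper name.

```shell
# Read "pcs status" output on stdin and print the node where the named
# resource is started. Prints nothing if the resource is not running.
# resource_node is a hypothetical helper; the line layout is an assumption.
resource_node() {
    awk -v r="$1" '$1 == r && $3 == "Started" { print $4 }'
}
```

Usage would be `pcs status | resource_node httpd_vip`. After the stop on nodeA, the expected answer is nodeB.netlite.it.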

Useful commands.

# pcs resource move apache nodeA.netlite.it

# pcs resource stop apache nodeB.netlite.it

# pcs resource disable apache nodeB.netlite.it

# pcs resource enable apache nodeB.netlite.it

# pcs resource restart apache

andrea

- Published in Non categorizzato, Sistemistica, Tips & Tricks, Virtualizzazione